Does a day go by when you’re not reading about AI in your news feed? Artificial intelligence is certainly becoming more mainstream as newsworthy applications like ChatGPT, voice assistants like Siri, and recommendation engines like Netflix and Amazon continue to entrench themselves in our day-to-day.

Beyond personal convenience, we’re increasingly seeing a role for AI in augmenting productivity and decision-making in our professional lives. Every industry is experiencing this transformation right now, and AI models come in many different specialized forms to address the wide range of challenges to be solved for.

As new AI solutions enter the field of reproductive medicine, clinicians and embryologists are pushed to consider how best to decipher what a “good” AI solution looks like – How is it built? How can you assess its performance and impact on your clinical practice? And how easily can this innovative technology be integrated into your clinical practice?

In this blog series, we’ll introduce you to the AI solution that we created at Future Fertility. We’ll break down the high-level steps required to develop a high-quality machine learning model for medical image analysis, using real examples that are relevant to oocytes and reproductive medicine.

Clinic stakeholders who are interested in learning more about the underlying analysis and development of these emerging AI technologies will find this series particularly helpful as they evaluate the quality of the models and the role they can play in their clinics.

WHAT IS MACHINE LEARNING AND DEEP LEARNING?

Machine learning is a subfield of artificial intelligence, which is broadly defined as the capability of a machine to imitate intelligent human behaviour.

Deep learning is a type of machine learning often used to classify images and detect features or objects in the image. It gained broad adoption in medical imaging to classify and predict health outcomes. This form of artificial intelligence first gained momentum in the medical field to process X-rays, CT scans, and MRIs, which contain a wealth of visual information that can be difficult for a human to process.

Deep learning uses a type of model called an artificial neural network that receives pixel-level data and is able to extract low- and high-level features of the images like edges, gradients, shapes, and textures – including those potentially undetectable to human eyes. By relating these features to health outcome data, neural networks can learn to predict outcomes for new images that it has never seen before. Already, technology like this has made strides in detecting various cancers or vision complications, enabling healthcare professionals to make earlier and more-informed treatment decisions.

Future Fertility is the first company to apply deep learning to the assessment of oocyte quality for reproductive medicine. Our technology processes images of oocytes to predict the likelihood that they will form a blastocyst. This is a perfect application for neural networks because, to date, researchers have not been able to determine features that impact quality that are visible to the human eye under a microscope.

Article 1 in this series will explore the first two steps in developing a deep learning model:

- • Step 1: Define the problem that the deep learning model will solve for

- • Step 2: Collect and prepare the data to be used to train the model

Article 2 will explore:

- • Step 3: Choose a deep learning model architecture

- • Step 4: Design the model

Article 3 will explore:

- • Step 5: Train the model

- • Step 6: Evaluate the model

STEP 1: DEFINE THE PROBLEM

A comprehensive and specific problem definition is an extremely important first step in building a deep learning model because it ensures that the model and its performance measurement approaches are designed to address the specific nuance of the problem at hand. This upfront planning pays off in delivering a model with higher performance and better interpretability.

This is particularly relevant for the reproductive field. Given all the unique nuances and variables that factor into the fertility journey at each stage, a clinical lens is critical to ensuring the right variables are considered, measured and isolated for in the model development.

What challenge within the fertility journey are we solving with machine learning?

Despite the oocyte’s incredible contributions to embryo development, there is currently no standardized scoring system to assess oocyte quality. Embryologists can review the morphological features of an oocyte in the lab and note any differences they observe, but studies haven’t been able to consistently link these differences to how well an embryo will develop.

Furthermore, the standard of care in approximating oocyte quality is to use population health statistics (based on patient age and the number of mature oocytes retrieved) to predict the likelihood of pregnancy success. This incorrectly assumes that all patients in the same group have the same health backgrounds and will achieve the same outcomes, while also assuming that all oocytes from one person are of equal quality.

What is the specific task that the model needs to complete to address this challenge?

Oocyte quality can be assessed by understanding an individual oocyte’s ability to reach key milestones on the path to a live birth. However, once fertilization occurs, additional factors beyond the oocyte may impact success as the process advances, making it difficult to isolate oocyte quality as the lead factor contributing to later development stages.

Blastocyst development was chosen as our model’s primary outcome in order to best control for factors external to the oocyte that may also impact pregnancy success (e.g., uterine environment, ploidy status, embryo transfer technique, challenges associated with carrying the pregnancy to term). It’s also notable that the highest rate of attrition between IVF stages occurs between fertilization and blastocyst formation. As such, oocytes that make it to the usable embryo stage are deemed high-quality by our model. Furthermore, pre-implantation embryo development during IVF takes place entirely within the fertility lab, adding an extra layer of control through standardized lab protocols.

With that, our machine learning model is trained to analyze 2D images of oocytes to predict their likelihood of forming a blastocyst.

STEP 2: COLLECT AND PREPARE THE DATA TO BE USED TO TRAIN AND TEST THE MODEL

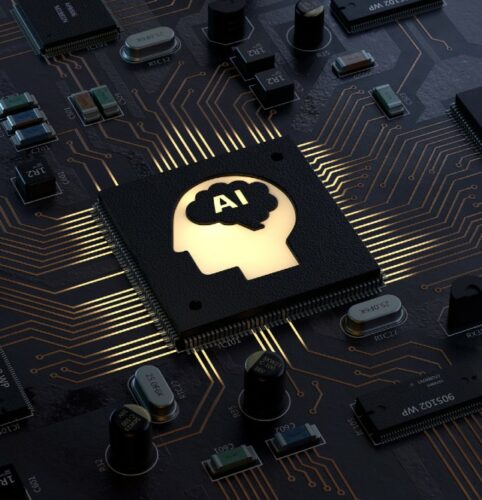

Once data is collected, cleaned and validated it’s split up into at least three separate subsets that are used to develop a machine learning model:

- • Training dataset: This is the set of data we use to teach the model how to perform a specific task (i.e., predict whether an oocyte will form a blastocyst or not, based on its image).

- • Validation dataset: This is a smaller set of data that we use to see how well the model is learning. After each round of training, the model’s performance is validated on this subset of data that was not seen during this iteration. The results of this validation are used to adjust parameters of the model before the next training round.

- • Test dataset: This dataset is used to determine how well the model can perform on new, unseen data. We only use this data at the very end of the model’s development, after all training and validation is complete.

What’s involved in building a strong dataset to develop the model?

If we want the neural network to be able to predict whether an oocyte develops into a blastocyst, then we need to show it many examples of oocyte images that did form a blastocyst and many that did not. This enables the model to learn relationships between image features and outcomes that will help it make good predictions in the future.

Gathering a strong dataset is key to building a high-performing and unbiased model. This step involves collecting and labelling oocyte images and their outcome data. (i.e., Did the oocyte on this image form a blastocyst?).

Good input data is crucial for good output. Here are some key characteristics to look for in a great training dataset:

LARGE SIZE:

A deep learning model is truly “learning”, and it starts this learning from scratch. Generally speaking, the more data the model trains with, the better it can predict outcomes over time (all other things considered – see below!)

Our model has been trained and tested on over 120,000 oocyte images and their outcomes – but size alone isn’t enough. The dataset needs to be representative of real-world data across multiple regions and clinics (see below “Diversity”) to ensure that the model can accurately predict outcomes when it is actually implemented in a new setting. It’s also important to include enough data (i.e., 1000+ images) for each clinical scenario for the model to pick up on nuances between regions, protocols and other variables.

DIVERSITY:

Data diversity is important to improve the model’s generalization and avoid what is known as “overfitting” the model. This includes diversity in terms of data sources, data types, and data distribution.

Overfitting arises when a model performs well on its training data but fails to generalize effectively to new data or exhibit relevance to real-world scenarios. It would be like memorizing the answers to a test instead of understanding the material properly to reason through an answer.

We ensure that our dataset includes a good mix of images representing real-world scenarios:

- • Different outcomes: Oocytes that formed a blastocyst, and oocytes that did not

- • Different countries and regions (and therefore populations): Our model has been trained on data from a variety of clinics (minimum 1000 per clinic/region) and institutions in eight countries across three continents.

- Studies like this example from Palacios et al. have shown differences in population reproductive health data across regions. It’s therefore important to represent different patient populations in our model’s training to help the model generalize to these types of regional variations.

- • Different lab equipment: Oocyte images look different depending on which type and model of equipment is capturing the image: a variety of microscopes and camera models, and time-lapse incubators.

- In integrating our technology with different lab equipment, we need to ensure that the model performs well in predicting outcomes from oocytes captured by different equipment types.

Our responsible approach to data sourcing ensures that we continue to grow the size and diversity of our dataset through the expansion of our data partnerships to clinics and institutions in new countries. This enables us to also capture images with different lab equipment and represent a variety of patient and donor populations.

QUALITY:

In an image classification model, it’s no surprise that the quality of the images training the model is crucial. The model also requires high-quality data labels (e.g., labelling the outcome, and various other clinical or demographic factors) to help it understand which types of data it’s analyzing. Labels should be accurate, consistent, complete, representative of the target distribution and unbiased to maximize the model’s performance.

Examples of our data labels include:

- • Outcomes: Gardner grade Blastocyst, Fertilization

- • Contributing sperm quality

- • Patient age

- • BMI

- • Protocol

Currently, only the oocyte images and their outcomes are used in our blastocyst prediction model. Patient age is layered on after the assessment to provide live birth predictions in our VIOLET™ reports to support patient counselling. We also proactively collect other relevant data labels in partnership with clinics, which enables us to develop new studies and insights to better understand correlations between a variety of factors and oocyte quality.

Just as a building needs a sturdy foundation to support its structure, a deep learning model needs high-quality data to support its predictions. If you train a deep learning model on poor-quality data, the model’s predictions will be unreliable and will fail when faced with new data.

Examples of factors impacting oocyte data quality include:

- • Image quality (e.g., in focus, correctly exposed, low noise, etc.)

- • Correctness of outcome labelling for data used to train the model

Our clinic partners can easily upload images and outcome data via our user-friendly applications that integrate directly with the lab’s image capture equipment. This collaborative effort to achieve high data quality underscores the importance of strong relationships between the model builder and the data partners.

To support our clinic partners, experienced embryologists on our Clinical Embryology team work hand-in-hand with labs to configure the image capture equipment and coach them on workflow best practices that ensure high-quality oocyte image capture.

To further ensure that labs are loading high-quality images, our software application conducts automatic quality assessment checks when images are loaded to our app. Lab teams receive immediate feedback in the app if the loaded images are blurry or noisy, providing an opportunity to capture new, improved images.

DATA HYGIENE:

Data quality serves as the foundation for effective data hygiene. It’s important to first establish data quality practices to ensure that data is accurate, reliable, and consistent before implementing data hygiene techniques to maintain data cleanliness and organization.

A key aspect of hygiene maintenance involves removing data duplicates, outliers, and irrelevant data. Having a large dataset where data is duplicated or not relevant to the model’s training is counterproductive.

Examples of hygiene maintenance on our dataset include:

- • Removing images of the same oocyte that have been captured twice by lab teams

- • Keeping eggs from the same retrieval cohort within a single dataset type (either training, validation or test sets). This ensures that the model isn’t “cheating” its test by seeing oocytes from the same retrieval cycle that it learned from in training, which may have similar characteristics to other oocytes from that cycle.

- • Labelling which oocytes are from donors vs patients, to ensure that the appropriate age is associated with the oocyte during research studies (i.e., If a 38-year-old patient receives a donated oocyte from a 31-year-old donor, then the age of the oocyte to be assessed is 31.)

BALANCE:

Collecting sufficient data from each outcome class is crucial for effective model learning. While a perfect 50/50 balance is not required, the dataset should include enough samples for each class to enable the model to learn relevant features and be representative of real-world outcomes, ensuring good generalization for clinical usage. Our dataset, for example, has about a 40/60 split between “blastocyst” and “no blastocyst” outcomes to mirror the real world results they are modelling.

Dataset balance enables the model to learn to recognize all classes equally, which helps to prevent biased predictions. Having a really large dataset isn’t useful if it isn’t properly balanced, as the model may learn to predict the majority class well and perform poorly in predicting the minority class.

For example, a dataset that is largely made up of images of oocytes that formed blastocysts will likely be better at predicting a positive outcome than a negative outcome because it’s focused its training too much on the aspects relevant only to blastocyst formation. It works the same way in our own brains – you will become more competent at completing tasks that you do more frequently than those that you rarely have to think through.

Dataset collection should also consider the distribution of other key data labels that may impact model performance. For example, we ensure that we have a good balance between different patient age groups as oocyte quality is known to generally decline with age.

ETHICAL CONSIDERATIONS:

A strong dataset should be collected and labelled in an ethical and responsible manner. It should also represent diverse populations to ensure that the model itself is not further perpetuating cultural biases. The methods of data collection as well as measures to ensure safe data storage and usage (i.e., de-identification) should be considered as part of the planning process before data collection begins.

Expanding our dataset size and diversification to new regions, patient and donor populations, and emerging use cases is a key part of our data strategy. As our model does not use any personal health information (PHI) for training, our solutions are also HIPAA- and GDPR-compliant.

All of the above characteristics are important to consider when building or evaluating a model’s training dataset. Focusing on individual elements in isolation can lead to gaps in generalizing the model to real-world clinical scenarios. As such, when you are evaluating different AI solutions for your clinic, be sure to get the full picture and avoid being swayed by marketing claims that focus only on one element. A great starting point is to ask about the dataset that the model was trained on and the specific problem that the model is solving for.

Future Fertility’s approaches to data strategy, data collection and new partnerships consider all angles, enabling us to work hand-in-hand with clinics and institutions on a global scale to establish a high-performing model that translates into practical application with clinical value, leveraging best-in-class AI data practices.

Stay tuned for our next installment in this series, where we’ll help you understand how a neural network is built, and what considerations are important in developing a solution for oocyte image assessment.

You Might Also Like …

Join our mailing list for dispatches on the future of fertility